I’m afraid I’m not as pleased with this month’s entry as I had hoped to be. The instrument I developed worked fairly well on the Organelle, but when I used it in combination with a wind controller, it was not nearly as expressive as I had hoped. I had also hoped to use the EYESY with a webcam, but I was not able to get the EYESY to recognize either of my two USB webcams. That being said, I think the instrument I designed is a good starting point for further development.

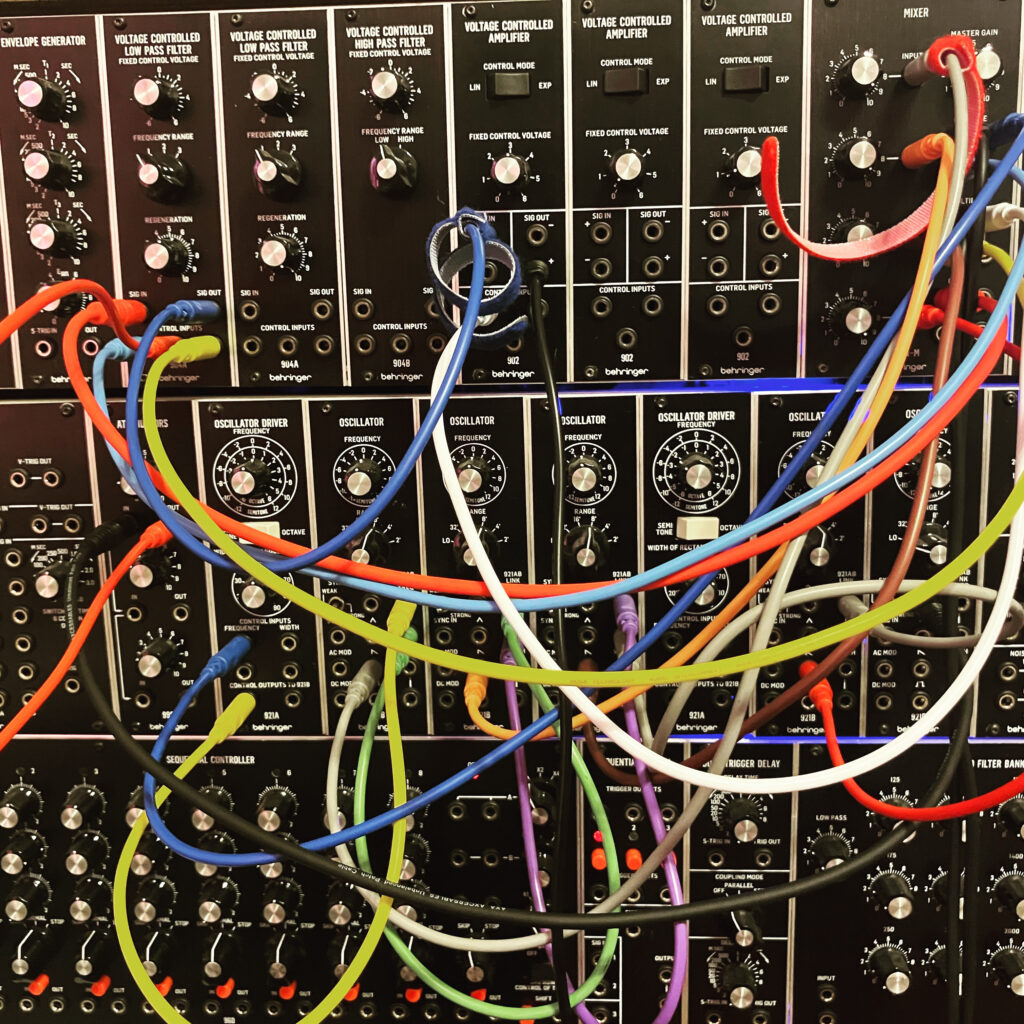

The instrument I designed, Additive Odd Even, is an eight-voice additive synthesizer. Additive synthesis, as the name implies, takes the opposite approach to subtractive synthesis. Subtractive synthesis was the most common approach for the first decades of synthesis, as it requires the fewest and least expensive components in comparison to most other approaches. Subtractive synthesis involves taking periodic waveforms that have rich harmonic content, and using filters to subtract some of that content to create new sounds.

Additive synthesis has been theorized but rarely attempted since the beginning of sound synthesis. Technically speaking, the early behemoth, the Telharmonium, used additive synthesis. Likewise, earlier electronic organs often used some variant of additive synthesis. One of the few true early additive synthesizers was the Harmonic Tone Generator. However, this instrument’s creator, James Beauchamp, only made two of them.

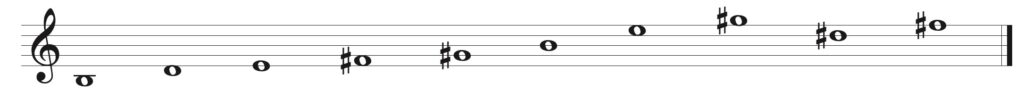

Regardless, additive synthesis involves adding pure sine tones together to create more complex waveforms. In typical early synthesizers, this was impractical, as it would require one expensive oscillator for every partial. As a point of reference, the Harmonic Tone Generator only used six partials.

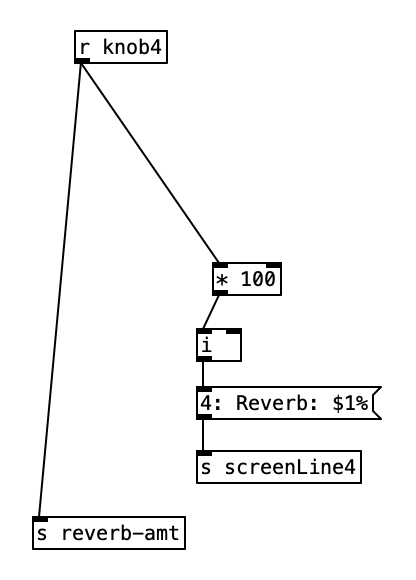

Additive Odd Even is based upon Polyphonic Additive Synth by user wet-noodle. In my patch, knob one controls the transposition level, allowing the user to raise or lower the pitch chromatically up to two octaves in either direction. The second knob controls the balance of odd versus even partials. When this knob is in the middle, the user will get a sawtooth wave, and when it is turned all the way to the left, a square wave will result. Knob three controls both the attack and release, which are set in milliseconds and range from 0 to 5,000 ms (5 seconds). The final knob controls the amount of additive synthesis applied, yielding a multiplication value of 0 to 1. This last knob is the one that is controlled by the amount of breath pressure from the WARBL. Thus, in theory, as more breath pressure is supplied, we should hear more overtones.
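As a rough illustration of the knob ranges described above, here is a minimal Python sketch rather than the actual Pure Data patch. It assumes each knob arrives as a normalized value between 0 and 1, and the function names are hypothetical ones I made up for this example.

```python
# Sketch of the knob mappings, assuming normalized knob values in 0.0..1.0.
# Function names are hypothetical; the real patch does this in Pure Data.

def transpose_semitones(knob):
    """Map knob one to a chromatic transposition of -24..+24 semitones."""
    return round(knob * 48) - 24

def transposed_frequency(freq_hz, semitones):
    """Shift a frequency by a number of equal-tempered semitones."""
    return freq_hz * 2 ** (semitones / 12)

def envelope_ms(knob):
    """Map knob three to an attack/release time of 0..5000 milliseconds."""
    return knob * 5000.0

def overtone_amount(knob):
    """Knob four (or WARBL breath pressure) is used directly as a 0..1 multiplier."""
    return max(0.0, min(1.0, knob))
```

With the first knob centered there is no transposition, and each extreme moves the pitch by two octaves, which is a factor of four in frequency.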

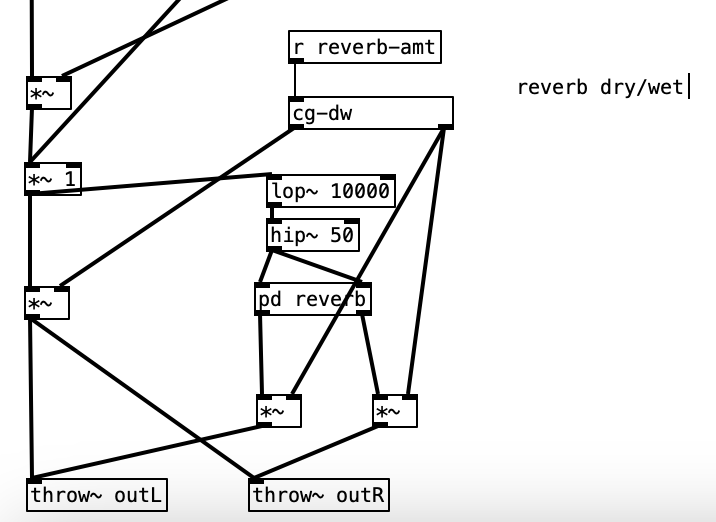

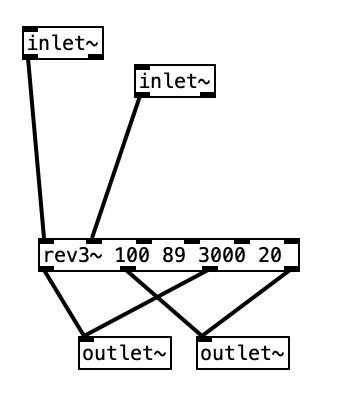

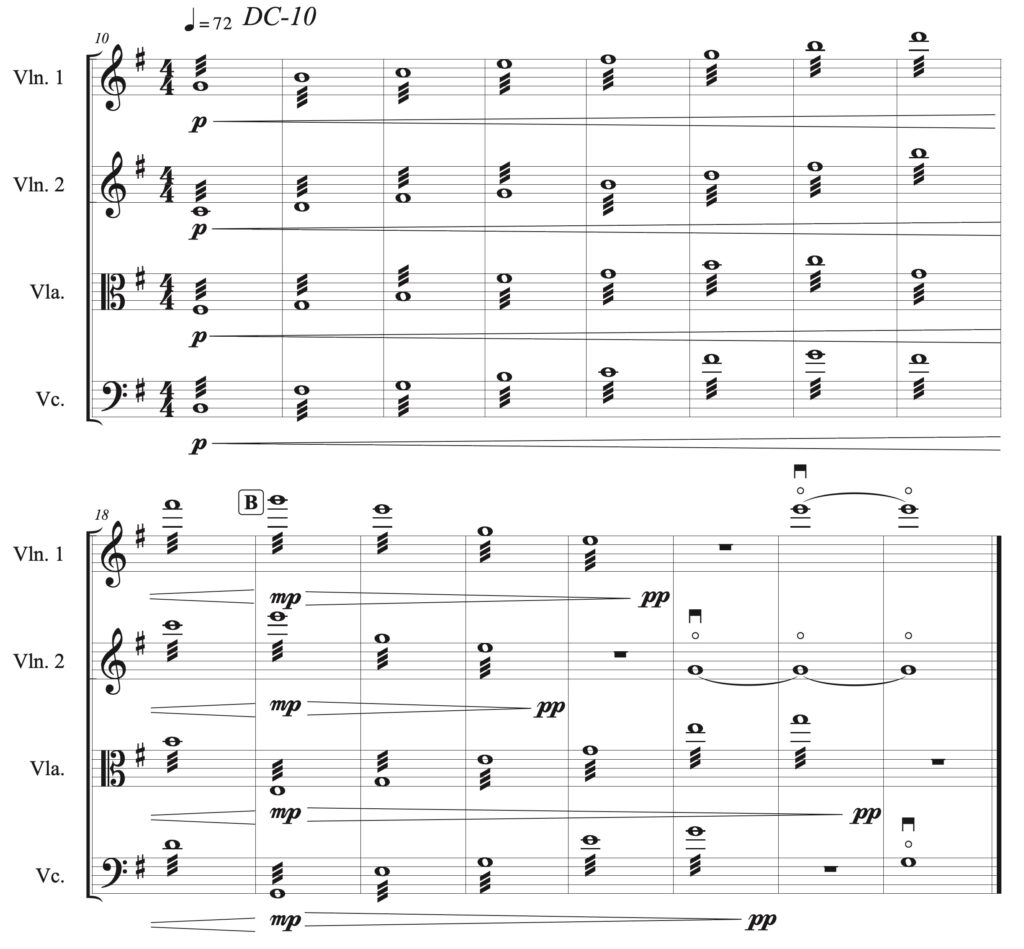

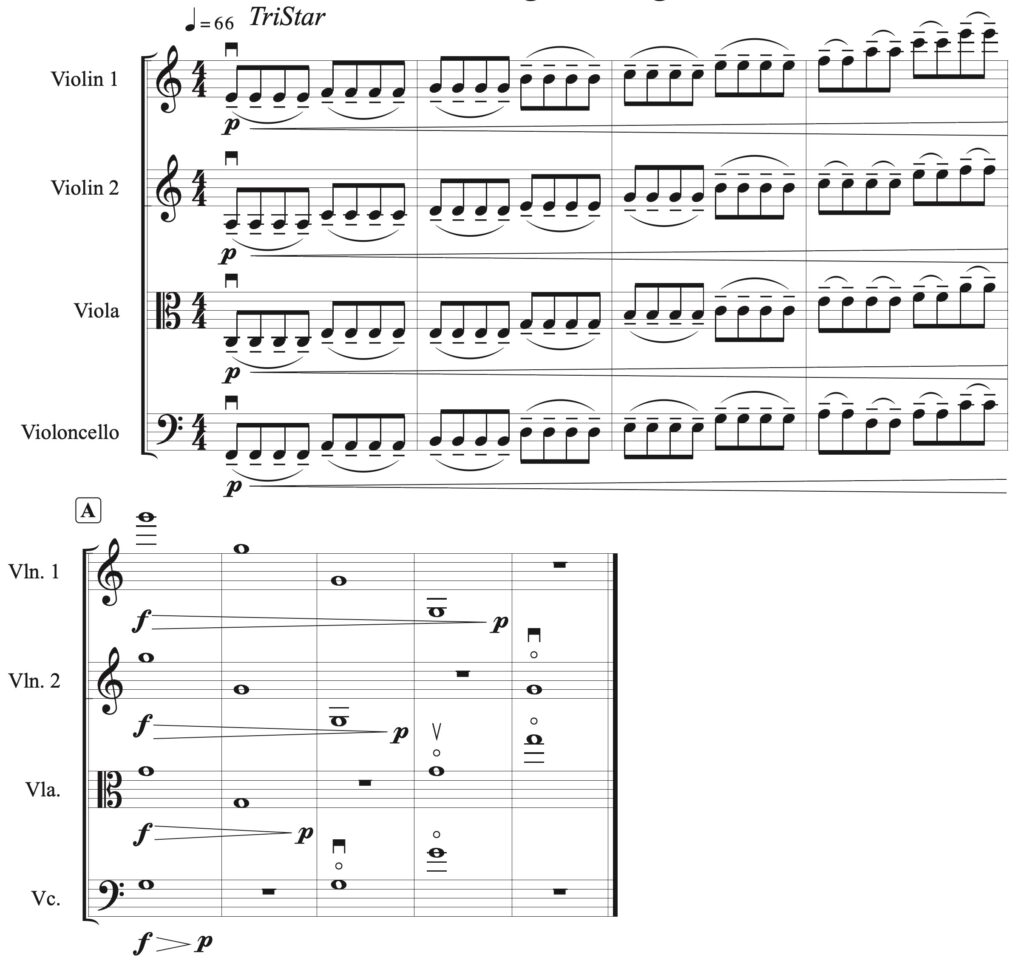

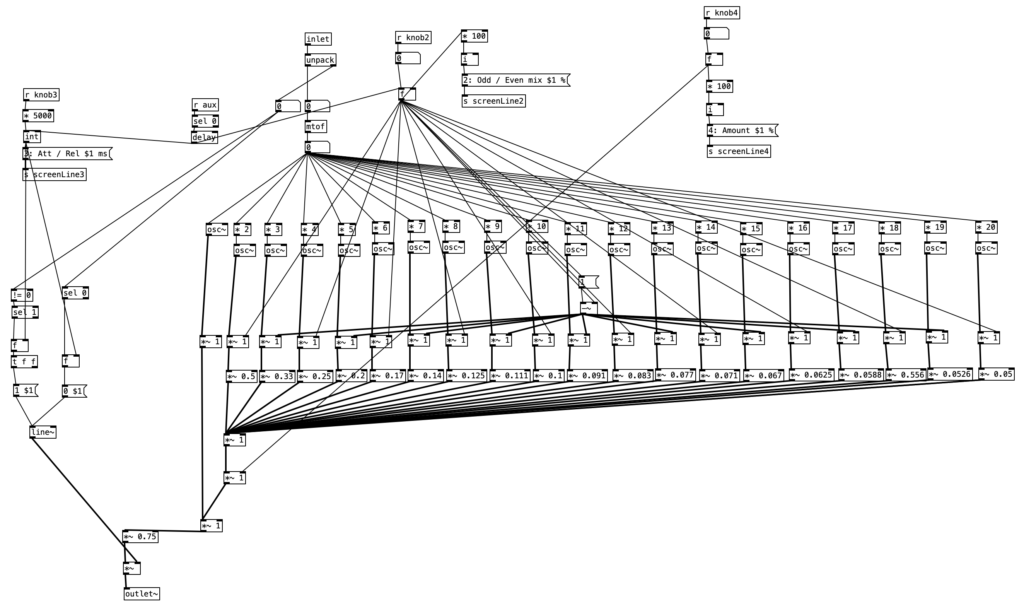

This instrument consists only of a main routine (main.pd) and one abstraction (voice.pd). Knob one is handled in the main routine, while the rest are handled in the abstraction. As we can see below, voice.pd contains 20 oscillators, which in turn provide 20 harmonic partials for the sound. We can see this in the way in which the frequency of each successive oscillator is multiplied by integers 1 through 20. A bit below these oscillators, we see that the amplitudes of these oscillators are multiplied by successively smaller values from 1 down to 0.05. These values roughly correspond to 1/n, where n is the harmonic number. Summing these partials together would result in a sawtooth waveform.
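The partial structure described above can be sketched outside of Pure Data. The following Python is only an illustration of summing 20 sine partials at 1/n amplitude. The function names and the 100 Hz test frequency are my own assumptions, not something taken from voice.pd.

```python
import math

NUM_PARTIALS = 20  # matches the 20 oscillators described for voice.pd

def partial_amplitudes(num_partials=NUM_PARTIALS):
    """Amplitude 1/n for each harmonic n = 1..num_partials."""
    return [1.0 / n for n in range(1, num_partials + 1)]

def additive_sample(fundamental_hz, t, amplitudes):
    """Sum sine partials at integer multiples of the fundamental at time t (seconds)."""
    return sum(
        amp * math.sin(2.0 * math.pi * fundamental_hz * n * t)
        for n, amp in enumerate(amplitudes, start=1)
    )

amps = partial_amplitudes()

# One cycle of a 100 Hz tone, sampled at 64 evenly spaced points.
cycle = [additive_sample(100.0, i / (100.0 * 64), amps) for i in range(64)]
```

Plotting `cycle` should show a ramp shape with some ripple, since 20 partials only approximate an ideal sawtooth.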

We see more multiplication / scaling above these values. Half of them come directly from knob 2, which controls the odd / even mix. These are used to scale only the even-numbered partials. Thus, when the second knob is turned all the way to the left, the value is 0, which effectively turns off all the even partials. This leaves only the odd partials, yielding a square waveform. The odd-numbered partials above the fundamental are scaled using 1 minus the value from the second knob. Accordingly, when knob 2 is placed in the center position, the odd and even partials are balanced equally, yielding a sawtooth wave. Once all of the partials except the fundamental have been scaled by knobs 2 and 4, they are mixed together, and then mixed with the fundamental. Thus, we can see that neither knob 2 nor knob 4 affects the amplitude of the fundamental partial. This waveform is then scaled by 0.75 to avoid distortion, and then scaled by the envelope, which is controlled by knob three.
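The scaling described above can be written as a small gain table. This Python sketch reflects my reading of the patch and is not a transcription of it. It assumes knobs 2 and 4 are normalized to 0..1 and that the two scalings are simply multiplied together. The name `partial_gains` is hypothetical.

```python
OUTPUT_SCALE = 0.75  # final scaling applied to avoid distortion

def partial_gains(odd_even, amount, num_partials=20):
    """Return the gain of each harmonic 1..num_partials before the envelope.

    odd_even: knob 2 (0..1); scales even partials, odd partials get 1 - odd_even.
    amount:   knob 4 (0..1); scales every partial except the fundamental.
    """
    gains = []
    for n in range(1, num_partials + 1):
        base = 1.0 / n  # the 1/n amplitude of harmonic n
        if n == 1:
            gain = base  # the fundamental bypasses knobs 2 and 4
        elif n % 2 == 0:
            gain = base * odd_even * amount
        else:
            gain = base * (1.0 - odd_even) * amount
        gains.append(gain * OUTPUT_SCALE)
    return gains
```

One thing this makes visible: with knob 2 centered, every overtone sits at half of its 1/n level while the fundamental stays at full level. Under these assumptions the result is closer to a sawtooth with a boosted fundamental than to an exact sawtooth.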

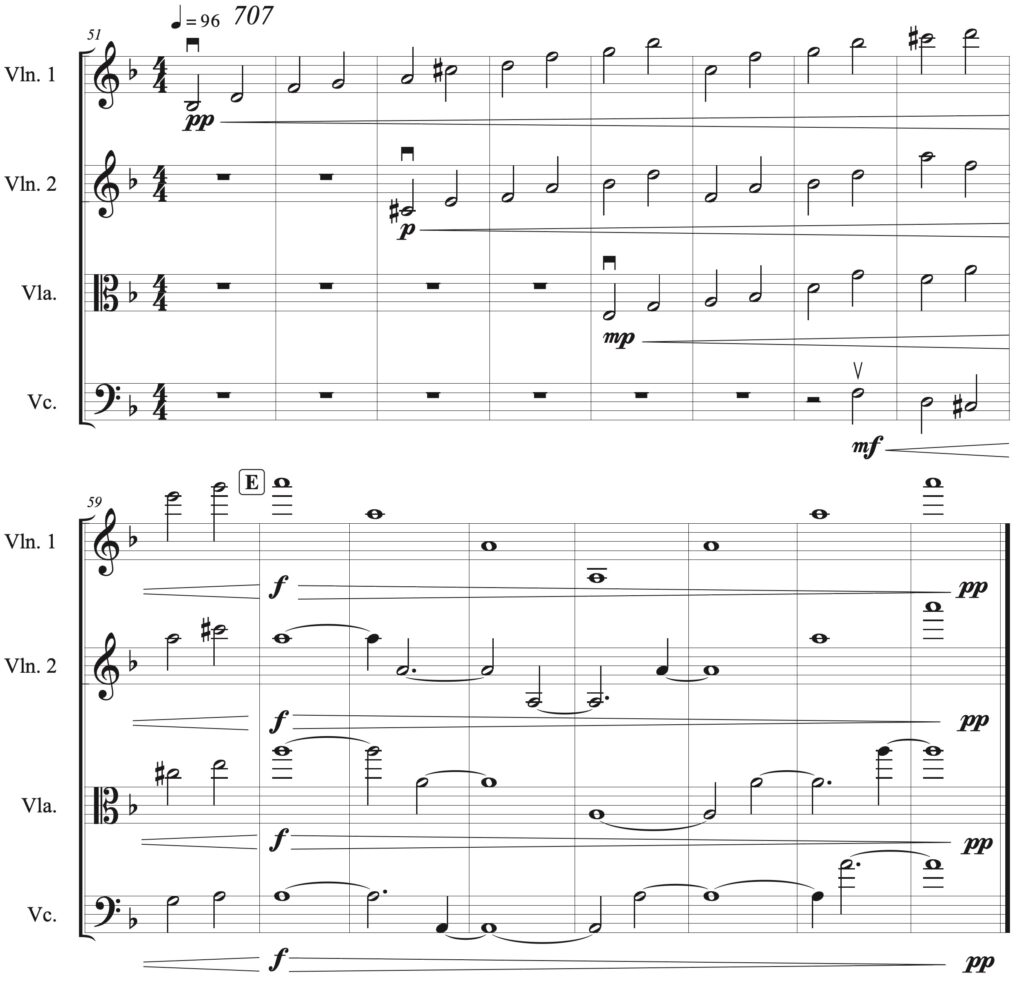

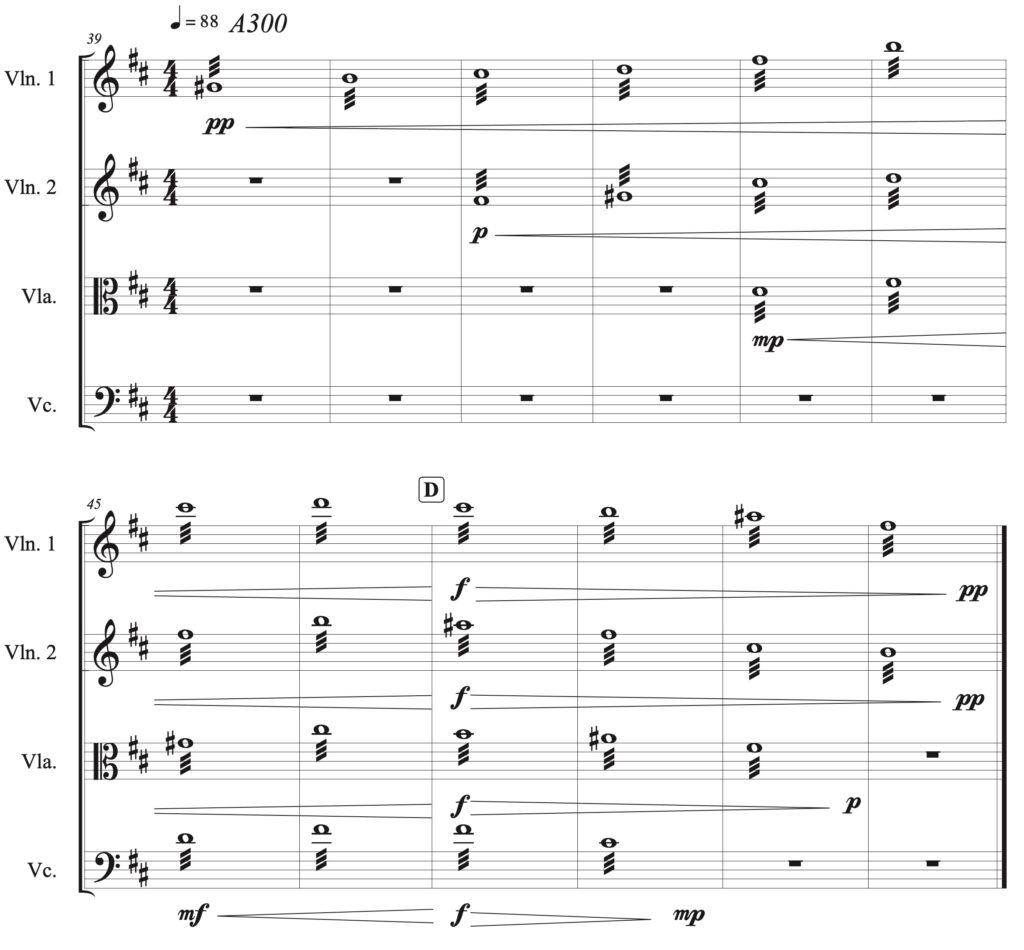

In August I had about one month of data loss. Accordingly, I lost much of the work I did on the PureData file that I used to generate the accompaniment for Experiment 5. Fortunately, I had the blog entry for that experiment to help me reconstruct that program. I also added a third meter, 7/8, in addition to the two meters used in Experiment 5 (4/4 and 3/4). Most of the work involved in adding it consists of creating a number of new arrays and extending the algorithm that was already covered in the blog entry for Experiment 5.

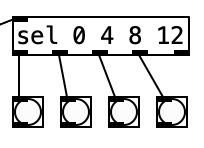

That being said, using an asymmetrical meter such as 7/8 creates a challenge for the visual metronome I added in Experiment 5. Previously I was able to feed a select statement, sel 0 4 8 12, from the counter that tracks the sixteenth notes in a given measure. I could then connect each of the four leftmost outlets of that sel statement to a bang. Thus, each bang would activate in turn when you reach a downbeat (once every 4 sixteenth notes).

However, asymmetrical meters will not allow this to work. As the name suggests, in such meters the beat length is inconsistent. That is, there are beats that are longer, and ones that are shorter. The most typical way to count 7/8 is to group the eighth notes with a group of 3 at the beginning, and to have two groups of 2 eighths at the end of the measure. This results in a long first beat (of 3 eighths or 6 sixteenth notes), followed by two short beats (of 2 eighths or 4 sixteenth notes each).
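The arithmetic behind this grouping is just a running total of beat lengths. Here is a minimal Python sketch of it. The function name and the list representation of a meter are hypothetical choices for illustration only.

```python
def downbeat_counts(beat_lengths_in_sixteenths):
    """Return the zero-based sixteenth-note count at which each beat begins."""
    counts = []
    position = 0
    for length in beat_lengths_in_sixteenths:
        counts.append(position)  # this beat starts where the previous one ended
        position += length
    return counts

FOUR_FOUR = [4, 4, 4, 4]   # four equal beats of 4 sixteenths
SEVEN_EIGHT = [6, 4, 4]    # 3 + 2 + 2 eighths, expressed in sixteenths

print(downbeat_counts(FOUR_FOUR))    # [0, 4, 8, 12]
print(downbeat_counts(SEVEN_EIGHT))  # [0, 6, 10]
```

The 7/8 beat lengths add up to 14 sixteenth notes, which is the same as 7 eighth notes.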

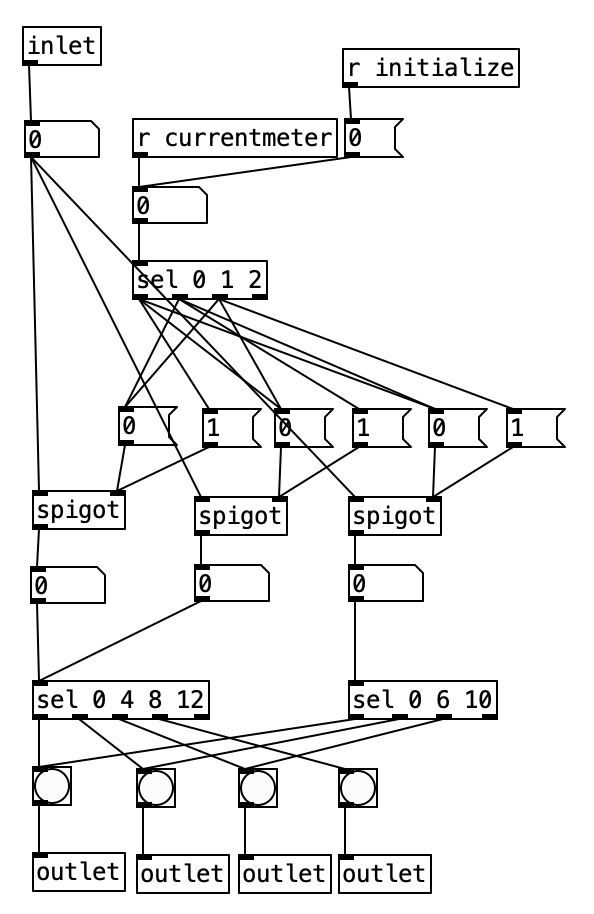

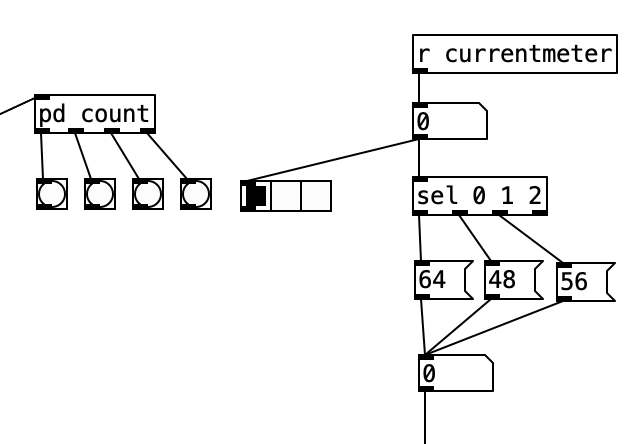

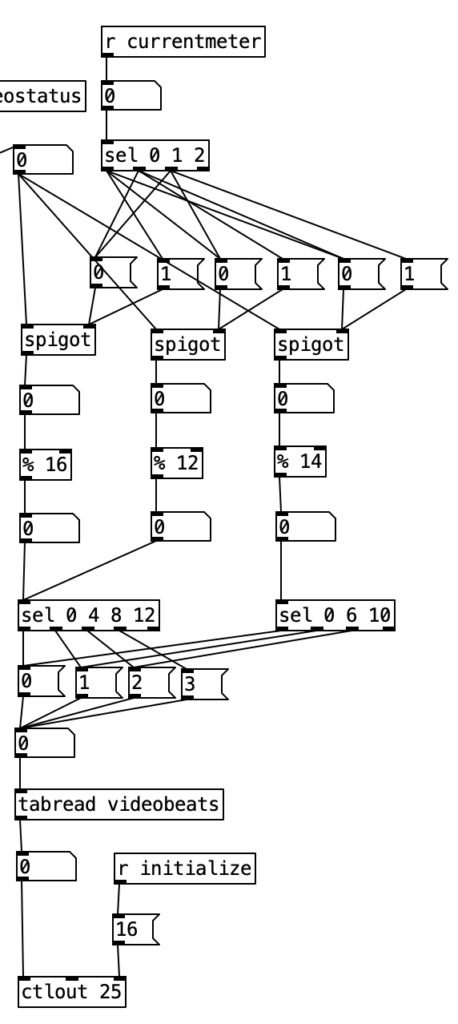

Accordingly, I created a new subroutine called pd count, which routes the sixteenth note count within the current measure based upon the current meter. Here we see that the value of currentmeter or a 0 sent by initialize is sent to a select statement that is used to turn on one of three spigots, and shut off the others. The stream is then sent to one of two select statements that identify when downbeats occur. Since both 4/4 and 3/4 use beats that are 4 sixteenth notes long, both of those meters can be sent to the same select statement. The other sel statement, sel 0 6 10, corresponds to 7/8. The second beat does not begin until count 6, after six sixteenth notes have elapsed, while the final downbeat occurs 4 sixteenth notes later at count 10.
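For readers who think more easily in text than in patch cords, the routing in pd count behaves roughly like a lookup table. This Python sketch is an analogy and not a translation of the patch. The meter labels used as keys are my own assumption, since the actual values of currentmeter are not shown here.

```python
# Downbeat positions per meter, mirroring the two sel statements.
# The string keys are hypothetical labels standing in for currentmeter values.
DOWNBEATS_BY_METER = {
    "4/4": (0, 4, 8, 12),
    "3/4": (0, 4, 8, 12),  # shares the 4/4 sel; a 3/4 measure has only counts 0..11
    "7/8": (0, 6, 10),
}

def beat_for_count(meter, count):
    """Return the zero-based beat index if count is a downbeat, else None."""
    downbeats = DOWNBEATS_BY_METER[meter]
    if count in downbeats:
        return downbeats.index(count)  # which outlet would fire
    return None
```

For example, `beat_for_count("7/8", 6)` gives 1 (the second beat), while `beat_for_count("7/8", 4)` gives `None` because count 4 is not a downbeat in 7/8.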

One novel aspect of this subroutine is that it has multiple outlets. Each outlet emits a bang and is connected to a different bang object, so the user can see the beats happen in real time. Note that this is next to a horizontal radio button, which displays the current meter. Thus, the user can use this to read both the meter and which beat number is active.

I had to essentially recreate the code inside pd count inside of pd videoautomation in order to change the value of knob 5 of the EYESY on each downbeat. Here the outputs from the select statements are sent to messages of 0 through 3, which correspond to beats 1 through 4. These values are then used as indexes to access values stored in the array videobeats.

I did not progress with my work on the EYESY during this experiment, as I had intended to use the EYESY in conjunction with a webcam, but unfortunately I could not get the EYESY to recognize either of my two USB webcams. I did learn that you can send MIDI program changes to the EYESY to select which scene or program to make use of. However, I did not incorporate that knowledge into my work.

One interesting aspect of the EYESY related to program changes is that it runs every program loaded onto the EYESY simultaneously. This allows seamless changes from one algorithm to another in real time. There is no need for a new program to be loaded. This operational aspect of the EYESY requires that the programs be written as efficiently as possible, and Critter and Guitari recommends loading no more than 10 MB of program files onto the EYESY at any given time so the operation does not slow down.

As stated earlier, I was disappointed in the lack of expression of the Additive Odd Even patch when controlled by the WARBL. Again, I need to practice my EVI fingering. I am not quite used to reading the current meter and beat information off of the PureData screen, but with some practice, I think I can handle it. While the programming changes for adding 7/8 to the program that generates the accompaniment are not much of a conceptual leap from the work I did for Experiment 5, they are a decent musical step forward.

Next month I hope to make a basic sample instrument for the Organelle. I will likely add another meter to the algorithm that generates accompaniment. While I’m not quite sure what I’ll do with the EYESY, I do hope to move forward with my work on it.