The good news is that the Hypno features an extensive MIDI implementation. The bad news is that it may not be implemented well. The MIDI specification allows for 128 controller numbers (0 through 127), which can do things like change the volume or panning of a sound. The Hypno makes use of 50 of these, which is far more than most MIDI devices that I am used to. In addition, 10 MIDI notes act as triggers for the Hypno.

I came up with an algorithm in Pure Data that makes use of 35 of the controller values and one of the triggers. This is where the bad news comes in. When I run the algorithm, the results are unpredictable. Namely, two of the triggers I do not use are the ones that change the shape of oscillators A and B, and yet when I run the algorithm those oscillator shapes often change without being directed to do so.

I spent a lot of time troubleshooting the algorithm, including recording the MIDI data in Logic Pro (see below). The MIDI data is coming out of Pure Data exactly as expected. When I cut the algorithm back to sending just one set of values (for instance, the note trigger or one of the controller values), the Hypno responds as predicted. However, sometimes just adding a second controller is enough for the Hypno to begin changing oscillator shapes without being prompted to. It may also be changing other values that I am simply not able to track. My working theory is that the Hypno cannot handle rapid MIDI input of several controller values.
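For a rough sense of scale, the arithmetic behind that theory can be sketched in Python. This assumes the 180 bpm tempo and the sixteenth-note counter described later in this post, plus a worst case in which all 35 controllers send a value on every tick. The function name is hypothetical and does not come from the patch.

```python
def messages_per_second(bpm: float, controllers: int, ticks_per_beat: int = 4) -> float:
    """Worst-case controller messages per second if every controller fires on every tick."""
    ticks_per_second = bpm / 60 * ticks_per_beat  # 4 sixteenth notes per beat (assumed)
    return ticks_per_second * controllers


print(messages_per_second(180, 35))  # 420.0
```

This calculation only shows how quickly the messages add up. It cannot confirm that the rate is what actually trips up the Hypno.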

In fact, it was this problem, which I wasn’t able to solve in March, that caused me to put this project on the back burner until the summer. Unfortunately, the extra time and brain space afforded me by the summer did not yield different results, so I decided to move forward with the algorithm as it is. My intent with this project was to have both oscillators using the video input shape, fed by videos stored on a USB thumb drive (in this case, video from the previous two experiments, that is, video from the final two tracks of Point Nemo). So, in practice, while I was recording the video output from the Hypno, whenever either oscillator shape changed, I changed it back to video input as quickly as I could. This added a bit more chaos to an already chaotic video.

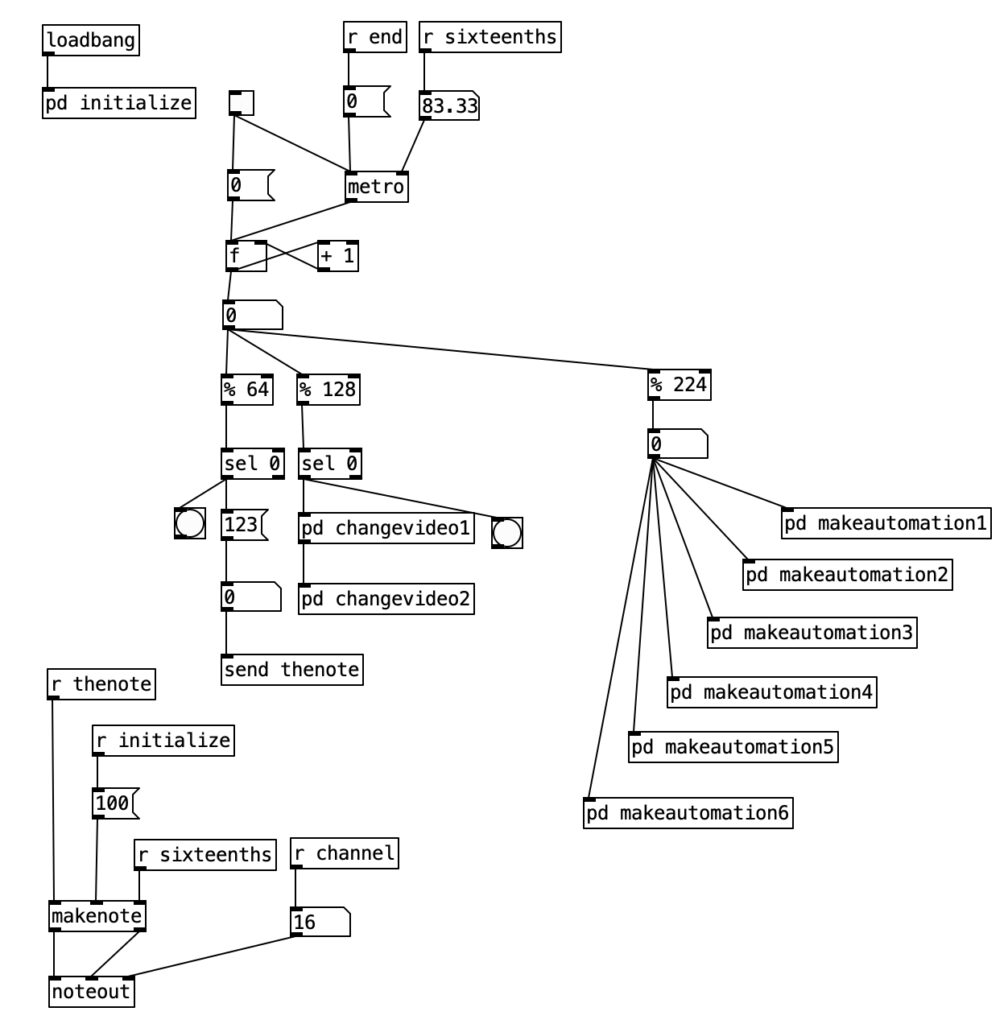

Alright, so let’s look at the algorithm in Pure Data. At the bottom left we see the part of the algorithm that sends the MIDI note out (it ends with a noteout object). In the upper left we see the initialize subroutine, which translates the tempo (180 bpm) into a number of milliseconds. It also initializes an array called parameters. Finally, it sets the MIDI channel to channel 16 (the Hypno is locked into channel 16, and it cannot be set to other MIDI channels). In the center of the screen, we see the primary algorithm. It includes a metronome, and a counter right below that. Then, beneath the number object below the counter, we see three different branches: one ending in a send object, the second ending in two subroutines labeled changevideo, and the final one ending in six different subroutines labeled makeautomation.
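As a small illustration of the tempo conversion, here is a Python sketch. It assumes the metronome ticks once per sixteenth note (which the counts in the following paragraphs suggest) and that the beat is a quarter note. The function name is hypothetical.

```python
def sixteenth_note_ms(bpm: float) -> float:
    """Length of one sixteenth note in milliseconds, assuming a quarter-note beat."""
    ms_per_beat = 60_000 / bpm  # one minute is 60,000 ms
    return ms_per_beat / 4      # four sixteenth notes per beat


print(round(sixteenth_note_ms(180), 2))  # 83.33
```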

We have seen various versions of all of these subroutines before. The first, which terminates in [send thenote], simply sends MIDI note number 123 to the Hypno once every four measures (16 sixteenth notes multiplied by four measures equals 64). This is the note number that toggles the feedback mode of the Hypno. The changevideo subroutines occur under a % 128 object. This causes these subroutines to execute once every eight measures (two times 64). The subroutine changevideo2 is similar to changevideo1, so let’s look at changevideo1.
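The timing of these two branches can be sketched in Python as modulo tests on the counter. This is an approximation of the scheduling logic, not a transcription of the patch. It assumes the counter advances once per sixteenth note and that each branch fires when its remainder is zero. The event labels are just strings chosen for the example.

```python
TICKS_PER_MEASURE = 16  # sixteenth notes in one measure of 4/4


def events_for_tick(tick: int) -> list:
    """Return which branches would fire on a given counter value."""
    events = []
    if tick % (4 * TICKS_PER_MEASURE) == 0:  # every 64 ticks: send the note
        events.append("note")
    if tick % (8 * TICKS_PER_MEASURE) == 0:  # every 128 ticks: changevideo
        events.append("changevideo")
    return events


# Show the first few ticks on which anything happens.
for tick in range(0, 257, 64):
    print(tick, events_for_tick(tick))
```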

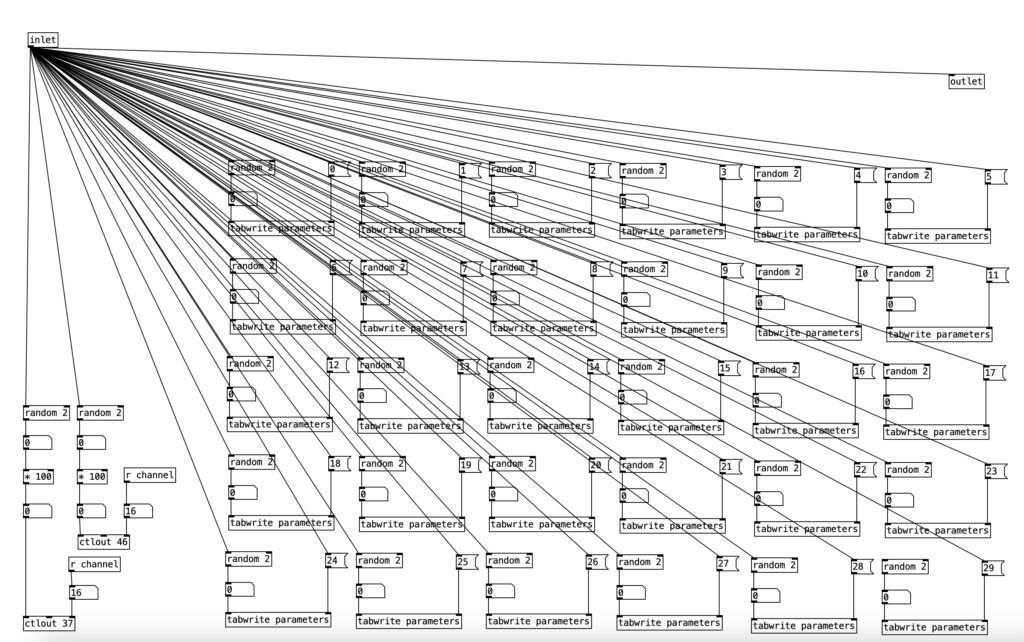

The bulk of this subroutine is 30 instances of a random 2 object followed by a tabwrite parameter object. What this means is that, once every eight measures, each of 30 parameters (which map onto controller values sent to the Hypno) has a fifty percent chance of being set to change and a fifty percent chance of being set to hold still. The changevideo2 subroutine simply contains the remaining five instances of the parameters.
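In Python terms, the coin flips could look roughly like the sketch below. The list stands in for the array, and the function name is hypothetical. Like random 2, `randrange(2)` returns either 0 or 1.

```python
import random


def randomize_flags(count: int, rng: random.Random) -> list:
    """Return `count` independent 0/1 flags, each with a fifty percent chance of being 1."""
    return [rng.randrange(2) for _ in range(count)]


rng = random.Random()
parameters = randomize_flags(30, rng) + randomize_flags(5, rng)  # 30 flags plus the remaining 5
print(len(parameters), parameters)
```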

On the left we see two random 2 objects followed by a * 100 object. In each case, this will result either in the number 0 or the number 100 being output. These are then sent to ctlout 37 or ctlout 46. These are the controller values that set the file index number for oscillators A and B respectively. To put it in other terms, this will cause the video input to toggle between the two video files stored on the USB thumb drive.
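The file selection can be sketched the same way. The controller numbers 37 and 46 come from the patch as described above. The dictionary and function names are hypothetical, and the sketch only computes the values rather than sending any MIDI.

```python
import random

FILE_INDEX_CC = {"A": 37, "B": 46}  # controller numbers for each oscillator's file index


def pick_file_indices(rng: random.Random) -> dict:
    """Map each file-index controller number to either 0 or 100, chosen at random."""
    return {cc: rng.randrange(2) * 100 for cc in FILE_INDEX_CC.values()}


print(pick_file_indices(random.Random()))
```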

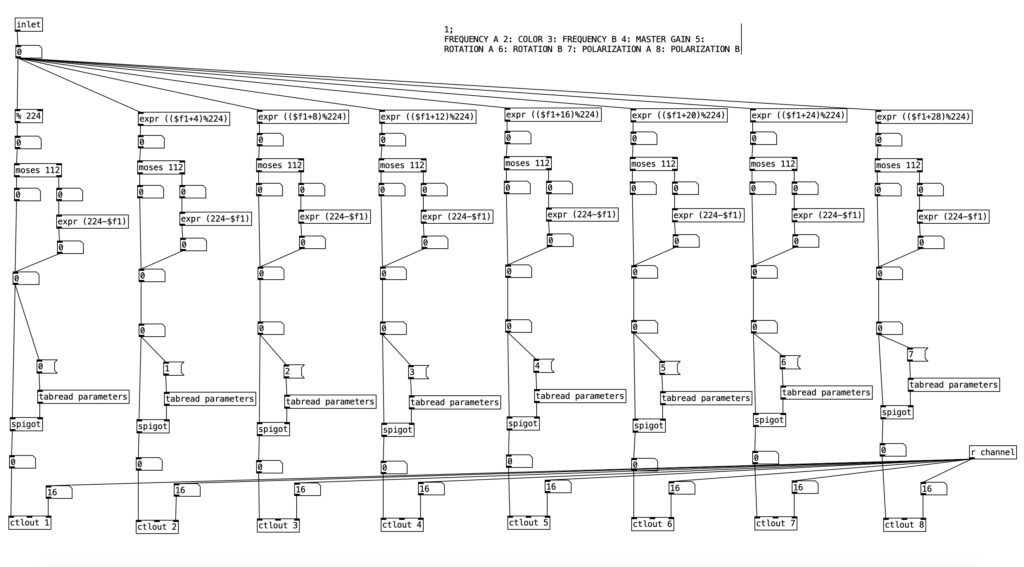

The six makeautomation subroutines are mostly similar to each other, so we’ll look at makeautomation1. This subroutine features eight instances of the same thing. Each starts with some version of a mod statement (% 224) or a similar expr object. The number 224 corresponds to 14 measures of music. If we halve that to seven measures, the number of sixteenth notes is 112. So why am I dealing with seven-measure units here? MIDI controller values range between zero and 127, and 112 is the largest multiple of 16 that is still less than 127. Each successive expr object increments the incoming value by four before modding the value. This is done so that the parameters are not all set to the same value.

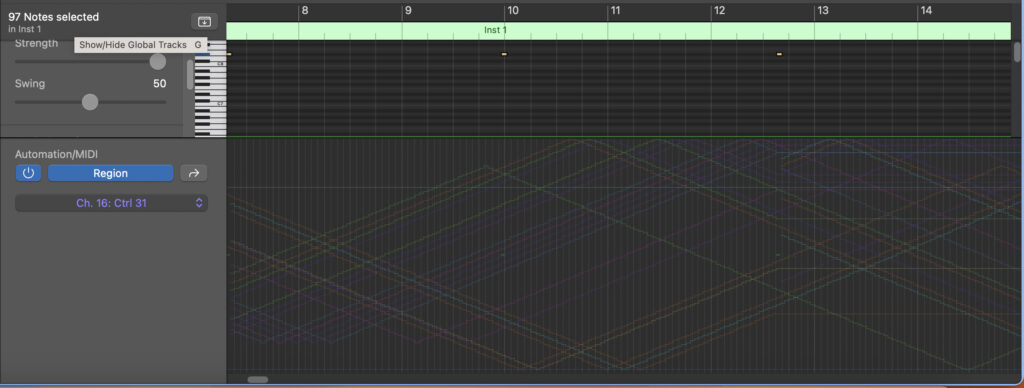

After the mod or expr object we see a moses object. When the value coming into the left inlet is below the stated value, in this case 112, that value is passed to the left outlet. If it is equal to or above that value, it passes out of the right outlet. This allows us to insert an expr object that subtracts the value from 224, which creates a motion from 112 back down toward 0. In short, this subroutine creates incremental parameter changes that trace out triangle waveforms. This can be confirmed by looking at the recorded MIDI data in Logic Pro.
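The mod, split, and subtract steps can be sketched in Python as follows. This is an approximation of the logic rather than the patch itself. The `offset` argument stands in for the per-parameter increments of four mentioned earlier, and the function name is hypothetical.

```python
PERIOD = 224  # sixteenth notes in 14 measures
PEAK = 112    # sixteenth notes in 7 measures; stays within the 0-127 controller range


def triangle(tick: int, offset: int = 0) -> int:
    """Rise from 0 to 112 over seven measures, then fall back over the next seven."""
    phase = (tick + offset) % PERIOD
    if phase < PEAK:        # below the split point: pass the value through unchanged
        return phase
    return PERIOD - phase   # at or above the split point: subtract from 224


print([triangle(t) for t in (0, 56, 112, 168, 223, 224)])  # [0, 56, 112, 56, 1, 0]
```

Every value the function returns lies between 0 and 112, so it always fits in a MIDI controller message.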

After this, the value passes through a spigot object. Here the spigot is controlled by a value from the parameters array. If that value is 0, the value going into the left inlet does not pass through. When that value is 1, the value at the left inlet is passed to the outlet. Accordingly, we can see how the changevideo subroutines turn these spigots on and off. The values are then sent to the ctlout objects below.
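A minimal Python sketch of this gating, assuming one flag per value with the two lists lined up by index (the function name and sample numbers are made up for the example):

```python
def gate_values(values: list, parameters: list) -> list:
    """Keep only the values whose flag is nonzero, like a row of spigots.

    Returns (index, value) pairs so the caller knows which parameter each value belongs to.
    """
    return [(i, v) for i, (v, flag) in enumerate(zip(values, parameters)) if flag != 0]


print(gate_values([10, 20, 30], [1, 0, 1]))  # [(0, 10), (2, 30)]
```

A parameter whose flag is 0 sends nothing at all, so the Hypno keeps whatever value it last received for that controller.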

The subroutine makeautomation1 handles the controllers for the color and the master gain. It also controls the values for frequency, rotation and polarization for both oscillators. The subroutine makeautomation2 handles controllers for oscillator A, including drift, color offset, saturation, fractal axis, and fractal amount. The subroutine makeautomation3 handles the same parameters as the previous subroutine, but for oscillator B. The subroutine makeautomation4 handles values related to feedback including: feedback hue shift, feedback zoom, feedback rotation, feedback X axis, and feedback Y axis. It also handles the controllers for feedback to gain for both oscillators. The subroutine makeautomation5 handles parameters for video manipulation of oscillator A, including X crop, lumakey, Y crop, lumakey max, and aspect A. The final makeautomation subroutine handles the same parameters as the previous subroutine, but for oscillator B.

As an experiment, this video was a success in the sense that it answered the question of whether the Hypno can handle a lot of MIDI controller input. That answer is clearly no. However, while the Hypno did not respond as directed by the algorithm in Pure Data, I cannot say that the results are not satisfying, though they are considerably more chaotic than the results of the previous two experiments. I will be doing one more experiment with driving the Hypno using MIDI data, and will likely continue to use MIDI data when performing with the Hypno. However, when making music videos I will likely use Eurorack control voltages to drive the Hypno, as the results are far more predictable and controllable.

Part of this experiment was to see the outer limits of using MIDI with the Hypno. As already noted, this yielded fairly chaotic results where the source material is almost completely unrecognizable. In practice it seems that I will get more aesthetically pleasing results if I rein in the controller changes, yielding a more subtle response.