It was a fairly short jump from my previous experiment to this one. For the music video for 21AC3627AB4, I focused on using constant, smooth changes of selected parameters to manipulate video footage using the Sleepy Circuits Hypno. For this experiment, I wanted to focus on abrupt parameter changes in order to create a sense of visual rhythm. To achieve this, I had to make very few changes to the Pure Data algorithm I used to control the Hypno.

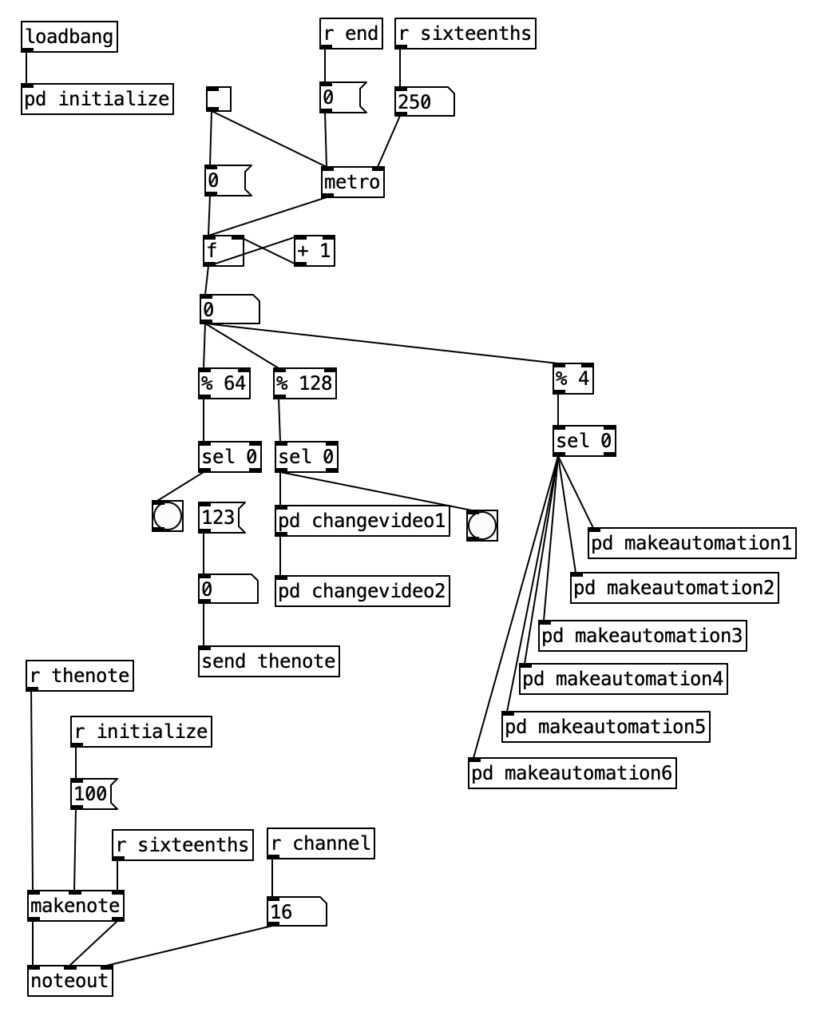

The overall algorithm for creating the video for 5B4C31 is nearly identical to the algorithm for creating the video for 21AC3627AB4. The right-hand branch off of the counter contains a mod (%) 4 object followed by a sel 0 object. This causes the subroutines below to execute once every four sixteenth notes, or to put it another way, once every beat.
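For readers who do not use Pure Data, the mod 4 and sel 0 pair can be sketched in a few lines of Python. This is only an illustration of the gating logic, assuming the counter advances by one on every sixteenth note; the names `is_beat` and `steps_per_beat` are my own and do not appear in the patch.

```python
def is_beat(step: int, steps_per_beat: int = 4) -> bool:
    """Rough equivalent of [% 4] followed by [sel 0].

    `step` is assumed to be a counter that increases by one per
    sixteenth note; the function is true once every four steps.
    """
    return step % steps_per_beat == 0


# One bar of sixteenth notes: only the steps that land on a beat pass through.
beats = [step for step in range(16) if is_beat(step)]
print(beats)  # [0, 4, 8, 12]
```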

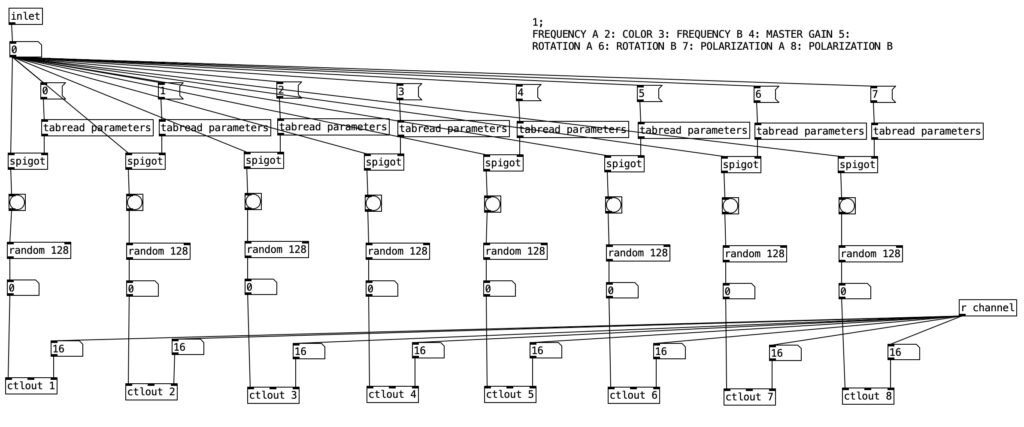

As was the case with the previous experiment, each of the makeautomation subroutines is largely the same, so we’ll look at the first one. This version of makeautomation is far simpler than the one used in the previous experiment. This subroutine is designed to send controller values for the first eight parameters used in the experiment. Each column is largely identical to the rest of the columns. The spigot object controls whether or not a bang is sent to the random 128 object below it. When the value stored in parameters is zero, the bang is blocked; when it is one, the bang passes through. The random 128 object outputs a value between 0 and 127 inclusive, which covers the full range of a MIDI controller value. This value is then sent to the Hypno using a ctlout object.
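The logic of a single makeautomation pass can be approximated in Python as follows. This is a sketch rather than a translation of the patch: the controller numbers (`first_cc`), the function name, and the idea of returning a list of messages instead of sending them over MIDI are all assumptions made for illustration.

```python
import random
from typing import List, Optional, Sequence, Tuple


def make_automation(
    enabled: Sequence[int],
    first_cc: int = 0,  # hypothetical starting controller number
    rng: Optional[random.Random] = None,
) -> List[Tuple[int, int]]:
    """Return (controller, value) pairs for one beat.

    `enabled` plays the role of the stored parameter flags: a 1 opens the
    spigot for that column, a 0 keeps it closed.
    """
    if rng is None:
        rng = random.Random()
    messages = []
    for offset, gate in enumerate(enabled):
        if gate:  # spigot open: the bang reaches the random object
            value = rng.randrange(128)  # like [random 128]: 0 to 127 inclusive
            messages.append((first_cc + offset, value))  # stand-in for ctlout
    return messages


# Eight columns, three of them switched on for this beat.
print(make_automation([1, 0, 1, 0, 0, 0, 0, 1], first_cc=20))
```

Only the columns whose flag is 1 produce a message, so turning a flag off freezes that parameter at its last value.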

The video input used for this experiment was the video for the next two tracks on Point Nemo, namely 21AC3627AB4 and 87ED21857E5. The video for 21AC3627AB4 is more chaotic in nature than that of 5B4C31. With the abrupt changes, I had thought that the latter video would be more chaotic. However, the difference in tempo (the former is at 180 beats per minute, while the latter is at 60 beats per minute) is likely one of the main contributors to the somewhat calmer flow of 5B4C31.

The sudden changes and the slower tempo require far less MIDI data to be sent to the Hypno, which resulted in far fewer glitches in the form of settings changes that were not specified by the algorithm. This was definitely a big problem with the previous experiment. In fact, there was only one time when one of the oscillators changed shape, and I manually set it back to the video input shape.

I purposefully created the videos for Point Nemo in reverse order, as I wanted the videos to become simpler as the album progresses, having the source video, late afternoon sunlight glistening on waves, gradually reveal itself over a one-hour experience. Given that I know what the source video is, I can occasionally see glimpses of it in the first two movements. That being said, there is a considerable step down in complexity between the second and third movements.

Another thing I discovered in this experiment was that many of the visual beats came out quite dark. This can be due to any number of factors, including bad settings for color, gain, frequency (zoom), and the like. In order to deal with this, I recorded twice as much video as I needed, and then simply edited out the dark sections. This required a couple of hours of work. While that somewhat defeats the goal of creating a music video in real time, a couple of hours to create a music video is still a fairly light workload. For future endeavors, I could limit the range of values to avoid such poor settings, or I could come up with algorithms to reject certain results.
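Both of those ideas can be sketched in Python. None of this exists in the current patch: the parameter names, the gain floor of 40, and the acceptance test are invented placeholders, since I have not yet worked out which settings actually produce the dark frames.

```python
import random
from typing import Callable, Dict


def limited_random(low: int, high: int, rng: random.Random) -> int:
    """Option 1: draw from a narrowed range instead of the full 0 to 127."""
    return rng.randint(low, high)  # inclusive on both ends


def sample_until_accepted(
    draw: Callable[[], Dict[str, int]],
    accept: Callable[[Dict[str, int]], bool],
    max_tries: int = 20,
) -> Dict[str, int]:
    """Option 2: redraw a whole set of values until a test accepts it.

    Falls back to the last draw if nothing passes within `max_tries`.
    """
    candidate = draw()
    for _ in range(max_tries - 1):
        if accept(candidate):
            break
        candidate = draw()
    return candidate


rng = random.Random()

# Option 1: keep a hypothetical gain control out of its darkest region.
gain = limited_random(40, 127, rng)

# Option 2: reject any combination whose (hypothetical) gain is too low.
settings = sample_until_accepted(
    draw=lambda: {"gain": rng.randrange(128), "color": rng.randrange(128)},
    accept=lambda s: s["gain"] >= 40,
)
print(gain, settings)
```

Limiting the range is simpler and maps directly onto a different argument for each random object, while rejection would allow tests that depend on several parameters at once.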

In fact, this experiment was successful enough that I have devised a rough plan for creating music videos for my forthcoming album, Monstrum Pacificum Bellicosum, which is due out at the end of 2026. Accordingly, I intend to start working on the videos relatively soon. The first step will likely be to find footage to manipulate. The current plan is to find footage from public domain and silent films.

I am fairly pleased with the number of streams of the Point Nemo videos on YouTube (5B4C31: 15, 21AC3627AB4: 90, 87ED21857E5: 213, and 364F234F6231: 118), particularly considering that the first video has been live for less than 24 hours. While those statistics may not seem earth-shattering, with the exception of the stats for 5B4C31, these surpass the streams those pieces have had through Bandcamp and DistroKid, so the videos are definitely helping with the visibility and distribution of the music.

I am considering a public showing of Point Nemo at Stonehill College, and I definitely plan on doing a live concert using the Hypno. However, my elder cat has a lot of health care issues, and so I’m trying to figure out whether it would be better to schedule such events in the Fall or Spring semester. I am also considering printing out selected individual frames from the Point Nemo videos, and treating them as 2D art pieces. Since I do plan on using the Hypno to create videos for Monstrum Pacificum Bellicosum, I plan on doing studio reports for my work on them, and treating them as part of my Digital Innovation Grant. That being said, it may be several months until I get to the point where I am ready to report on my work for Monstrum Pacificum Bellicosum.